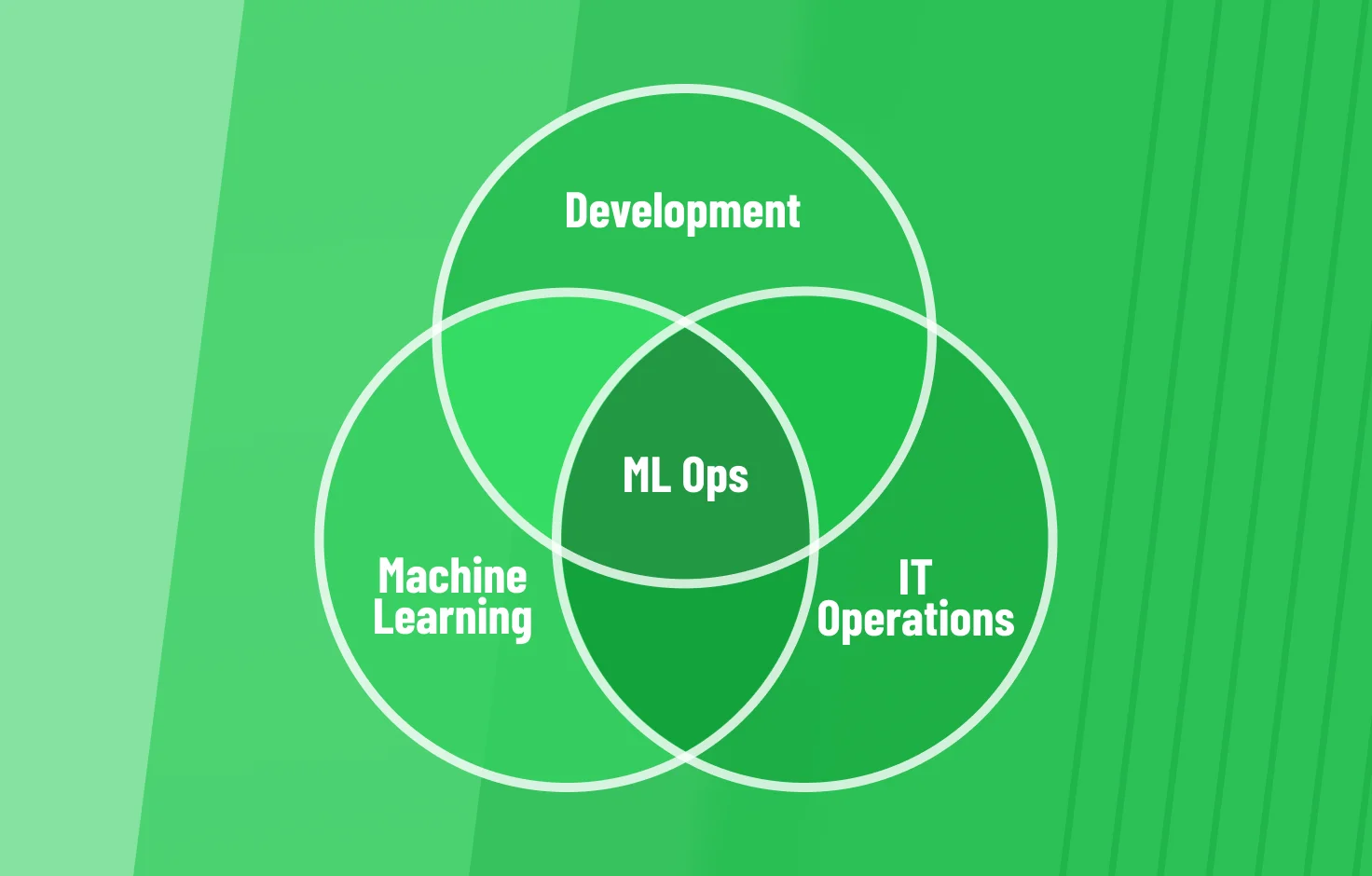

Artificial intelligence (AI) projects today often begin as experiments: proofs of concept, pilots, and models built in notebooks. However, without a clear strategy for operationalizing them, many models never make it into production, and those that do often fail to scale. MLOps (Machine Learning Operations) bridges data science and IT/DevOps so that models can be deployed and operated reliably.

MLOps addresses the problems that emerge when working models move into production: messy data pipelines, ad hoc scripts, missing monitoring and versioning, and frequent surprises in real-world usage. Without MLOps practices, AI initiatives are more likely to underdeliver, cost more to maintain, or stall.

Why Is MLOps Necessary?

Many organizations succeed in building AI prototypes but stumble when trying to operationalize them at scale. Models that work in a lab environment often collapse under real-world data, fragmented tools, and inconsistent processes. Without a structured approach, companies face mounting costs, unreliable outcomes, and frustrated teams. This is where MLOps proves essential: it transforms AI from an experimental exercise into a repeatable, dependable business function.

Scaling AI introduces a distinct set of challenges. Key among them:

- High manual overhead: Data scientists spend excessive time on data prep, labeling, reworking pipelines, debugging, and maintenance rather than creating new features.

- Tooling fragmentation: Different teams use different frameworks, such as TensorFlow, PyTorch, scikit-learn, or different cloud/on-premises infrastructures, leading to integration issues and inconsistent performance.

- Lack of standards and governance: No consistent versioning of data and models or tracking of experiment metadata; model drift goes unchecked; retraining is manual and often delayed.

- Poor reliability and reproducibility: What works in the lab fails in production due to differences in runtime, data volume, or deployment environment.

What recent surveys show:

- A survey by TMMData & Digital Analytics Association found that nearly 40% of data professionals spend more than half of their workweek collecting, integrating, and preparing data rather than analyzing it.

- Research from “Resource Realities” reveals that 60-68% of data practitioners report significant time wasted due to maintenance, rework, and misalignment with business requirements.

These statistics underscore two risks: lost agility and rising technical debt. Without structured operations, budgets tend to skew toward maintenance rather than innovation.

How Can MLOps Solve These Problems?

MLOps is a discipline built on principles that bring structure and resilience to the entire machine learning lifecycle. By embedding automation, enabling seamless integration across platforms, and ensuring scalability and reliability, MLOps turns fragile prototypes into robust, production-ready systems. These principles lay the foundation for sustainable AI adoption, making advanced technologies manageable and measurable in business terms.

| Principle | What it enables | Key benefits |
|---|---|---|
| Automation | Full pipeline from data ingestion → labeling → model training → deployment → monitoring/retraining. | Fewer manual errors, faster iteration, reduced operational cost. |
| Integration | Unified workflows and toolchains: experiment tracking, model registries, CI/CD, infrastructure management. | Reduced friction between teams, consistent environments, and better reproducibility. |
| Scalability and reliability | Handling multiple models, scaling inference, and coping with real-world variability (data drift, latency). | Stable service levels and the ability to update, version, roll back, and maintain SLAs. |

Automation

MLOps automates many steps in the ML lifecycle:

- Data collection and labeling: Automated data pipelines and semi-automated labeling, including human-in-the-loop (HITL) systems, reduce delays and inconsistency.

- Model training and testing: Continuous training and validation, automated hyperparameter tuning, and rigorous test suites that prevent regressions.

- Deployment and monitoring: CI/CD for model deployment, drift detection, automatic rollback when performance degrades, and monitoring of latency, throughput, and accuracy.
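To make the human-in-the-loop idea concrete, here is a minimal sketch that assumes the model returns a confidence score with each prediction: confident predictions are accepted as labels automatically, and the rest go to a human review queue. The `Prediction` type, the `route_for_labeling` helper, and the 0.9 threshold are hypothetical choices for illustration, not the interface of any particular labeling tool.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's probability for the predicted label, in [0, 1]


def route_for_labeling(predictions, threshold=0.9):
    """Split predictions into auto-accepted labels and a human review queue.

    Hypothetical helper: the threshold is an assumption and should be tuned
    against the cost of labeling errors in your domain.
    """
    auto_labeled, review_queue = [], []
    for p in predictions:
        if p.confidence >= threshold:
            auto_labeled.append(p)   # trusted enough to label without review
        else:
            review_queue.append(p)   # a person confirms or corrects the label
    return auto_labeled, review_queue


batch = [
    Prediction("img-001", "cat", 0.97),
    Prediction("img-002", "dog", 0.62),
    Prediction("img-003", "cat", 0.91),
]
auto, review = route_for_labeling(batch)
print([p.item_id for p in auto])    # ['img-001', 'img-003']
print([p.item_id for p in review])  # ['img-002']
```

In practice, reviewed items are usually fed back into the training set, and the threshold is tuned by comparing auto-accepted labels against human spot checks.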

Automation significantly reduces human error; some workflow-automation studies report error reductions of up to 80%, although results vary widely by domain.

Seamless integration

A common pain point: data scientists build models locally, often in notebooks, but these models don't fully match what production expects. Tools and platforms vary, and integration between training, serving, and monitoring is often ad hoc. MLOps seeks to unify this ecosystem through:

- Experiment tracking tools (e.g., MLflow, Weights & Biases (W&B)), model registries, and versioned datasets.

- Standardized deployment pipelines across cloud, on-premises, or hybrid setups.

- Infrastructure as code (IaC), containerization, orchestration (e.g., Kubernetes), and a microservices architecture to keep environments consistent.
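The snippet below is a minimal, dependency-free sketch of what experiment tracking records. It is not the MLflow or W&B API; `ExperimentLog` and `dataset_fingerprint` are hypothetical names. Each run stores its parameters, its metrics, and a SHA-256 hash of the training data, so any result can be traced back to the exact dataset that produced it.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


def dataset_fingerprint(data: bytes) -> str:
    """Content hash that changes whenever the training data changes."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class RunRecord:
    run_id: int
    params: dict
    metrics: dict
    dataset_sha256: str


@dataclass
class ExperimentLog:
    """Hypothetical minimal tracker: an append-only list of run records."""
    runs: list = field(default_factory=list)

    def log_run(self, params: dict, metrics: dict, data: bytes) -> RunRecord:
        record = RunRecord(len(self.runs) + 1, dict(params), dict(metrics),
                           dataset_fingerprint(data))
        self.runs.append(record)
        return record

    def best_run(self, metric: str) -> RunRecord:
        # Assumes "bigger is better" for the chosen metric.
        return max(self.runs, key=lambda r: r.metrics[metric])

    def to_jsonl(self) -> str:
        # One JSON object per line, ready to append to a file or ship to a server.
        return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in self.runs)


log = ExperimentLog()
data_v1 = b"age,income,label\n34,52000,0\n51,87000,1\n"
log.log_run({"max_depth": 3}, {"f1": 0.71}, data_v1)
log.log_run({"max_depth": 5}, {"f1": 0.76}, data_v1)
best = log.best_run("f1")
print(best.run_id, best.params)  # 2 {'max_depth': 5}
```

Dedicated trackers persist these records to a server and add dashboards and artifact storage; the point here is only that parameters, metrics, and a data fingerprint are captured together for every run.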

For example, in a maritime safety program, a microservices architecture with contract-based interfaces let teams work in parallel and made standards across data, models, and code enforceable.
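A contract-based interface can be as simple as a shared, versioned schema that every service validates at its boundary. The sketch below assumes a hypothetical vessel-position payload; the field names, ranges, and the `validate` helper are invented for illustration and are not taken from the maritime program described above. Production systems would more likely use a schema language or library, but the principle is the same: reject a payload that breaks the agreement before it reaches a model.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional


class ContractViolation(ValueError):
    """Raised when a payload breaks the agreed interface."""


@dataclass(frozen=True)
class FieldSpec:
    type_: type
    min_value: Optional[float] = None
    max_value: Optional[float] = None


# Hypothetical contract (version 1) between a data service and a model service.
POSITION_CONTRACT_V1: Dict[str, FieldSpec] = {
    "vessel_id": FieldSpec(str),
    "latitude": FieldSpec(float, -90.0, 90.0),
    "longitude": FieldSpec(float, -180.0, 180.0),
    "speed_knots": FieldSpec(float, 0.0, None),
}


def validate(payload: Dict[str, Any], contract: Dict[str, FieldSpec]) -> None:
    """Reject payloads with missing or extra fields, wrong types, or out-of-range values."""
    missing = contract.keys() - payload.keys()
    extra = payload.keys() - contract.keys()
    if missing or extra:
        raise ContractViolation(f"missing={sorted(missing)} extra={sorted(extra)}")
    for name, spec in contract.items():
        value = payload[name]
        # Strict typing: an int is not accepted where a float is agreed.
        if not isinstance(value, spec.type_):
            raise ContractViolation(f"{name}: expected {spec.type_.__name__}")
        if spec.min_value is not None and value < spec.min_value:
            raise ContractViolation(f"{name}: below {spec.min_value}")
        if spec.max_value is not None and value > spec.max_value:
            raise ContractViolation(f"{name}: above {spec.max_value}")


validate({"vessel_id": "V-17", "latitude": 59.3, "longitude": 18.1,
          "speed_knots": 12.5}, POSITION_CONTRACT_V1)  # passes silently
```

Versioning the contract itself (here the `_V1` suffix) lets producers and consumers upgrade independently, which is what allows teams to work in parallel.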

Scalability and reliability

A single model serving predictions on test data may be manageable by hand. Once you have dozens or hundreds of models in production, running different versions in different regions against varied inputs, you need robust mechanisms:

- Model versioning and rollback.

- Monitoring for performance degradation (e.g., AUC, F1), data drift, and concept drift.

- Automated retraining when performance drops.

- Autoscaling of inference infrastructure to match request load.
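Versioning and rollback can be illustrated with a minimal in-memory model registry. This is a sketch under simplifying assumptions: `ModelRegistry` and its methods are hypothetical, and models are stand-in strings. Production registries (for example, the MLflow Model Registry) add persistence, access control, and stage metadata on top of the same core idea: keep every version, record which one is live, and remember the promotion order so you can step back.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class ModelRegistry:
    """Hypothetical registry: stores model versions per name and tracks the live one."""
    versions: Dict[str, List[Any]] = field(default_factory=dict)
    live: Dict[str, int] = field(default_factory=dict)           # name -> live version
    history: Dict[str, List[int]] = field(default_factory=dict)  # promotion order

    def register(self, name: str, model: Any) -> int:
        self.versions.setdefault(name, []).append(model)
        return len(self.versions[name])  # versions are numbered from 1

    def promote(self, name: str, version: int) -> None:
        if not 1 <= version <= len(self.versions.get(name, [])):
            raise ValueError(f"{name} has no version {version}")
        self.history.setdefault(name, []).append(version)
        self.live[name] = version

    def rollback(self, name: str) -> int:
        """Make the previously promoted version live again."""
        promoted = self.history.get(name, [])
        if len(promoted) < 2:
            raise RuntimeError(f"no earlier version of {name} to roll back to")
        promoted.pop()
        self.live[name] = promoted[-1]
        return self.live[name]

    def get_live(self, name: str) -> Any:
        return self.versions[name][self.live[name] - 1]


reg = ModelRegistry()
v1 = reg.register("churn", "model-a")  # stand-ins for trained model objects
v2 = reg.register("churn", "model-b")
reg.promote("churn", v1)
reg.promote("churn", v2)
reg.rollback("churn")                  # e.g., after v2 degrades in production
print(reg.get_live("churn"))           # model-a
```

Rollback only switches which stored version is served; nothing is retrained or deleted, which is what makes it fast and safe.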

These practices ensure AI systems remain reliable as usage increases and conditions evolve.

Why the Business Side Matters

Technology alone is not enough. Companies invest in AI not for the sake of models, but for measurable impact: faster decision-making, leaner operations, happier customers, and competitive positioning. Without operational discipline, even the most innovative models remain research artifacts. MLOps creates the foundation to capture business value, moving AI from experimental to essential.

Here’s how it translates into business benefits:

Reduced time-to-market

Traditionally, moving a model from notebook to production can take months. MLOps accelerates this process through automated pipelines and CI/CD, allowing teams to deploy models in days or weeks instead.
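Much of that speed comes from replacing manual sign-off with an automated promotion gate in the CI/CD pipeline. The sketch below is one possible gate, assuming the pipeline has already evaluated the candidate and production models on the same held-out data; the metric names (`f1`, `p95_latency_ms`), thresholds, and the `promotion_gate` function are hypothetical and would normally come from pipeline configuration.

```python
from typing import Dict, List, Tuple


def promotion_gate(candidate: Dict[str, float], production: Dict[str, float],
                   max_f1_drop: float = 0.0, latency_budget_ms: float = 50.0
                   ) -> Tuple[bool, List[str]]:
    """Decide whether a candidate model may replace the production model.

    Returns (approved, reasons_for_rejection). Thresholds are assumptions.
    """
    reasons = []
    # Quality check: the candidate must not be worse than production beyond the tolerance.
    if candidate["f1"] < production["f1"] - max_f1_drop:
        reasons.append(f"f1 regressed: {candidate['f1']:.3f} < {production['f1']:.3f}")
    # Performance check: the candidate must fit the serving latency budget.
    if candidate["p95_latency_ms"] > latency_budget_ms:
        reasons.append(f"p95 latency {candidate['p95_latency_ms']} ms "
                       f"exceeds {latency_budget_ms} ms")
    return (not reasons, reasons)


prod = {"f1": 0.81, "p95_latency_ms": 38.0}
cand = {"f1": 0.84, "p95_latency_ms": 41.0}
approved, why = promotion_gate(cand, prod)
print(approved, why)  # True []
```

In a CI job, the script would typically exit with a non-zero status when `approved` is false, which stops the deployment stage and surfaces the rejection reasons in the build log.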

For businesses, this speed means:

- Faster delivery of new customer-facing features.

- The ability to experiment, fail, and pivot more quickly.

- Staying ahead of competitors by iterating continuously rather than waiting for lengthy IT rollouts.

A bank with mature MLOps, for example, can ship a fraud-detection update quickly in response to new attack patterns, reducing losses and enhancing customer trust.

Resource savings

Manual tasks such as log analysis, dataset cleaning, or patching deployment scripts consume valuable engineering time. MLOps reduces this overhead with standardized workflows and automation, freeing teams to focus on innovation instead of firefighting.

For organizations, this translates into:

- Lower operational costs due to reduced manual intervention.

- Less downtime and fewer production errors, which often carry hidden costs.

- Leaner teams that deliver more output, freeing budget for infrastructure and other investments.

Case in point: companies have reported material infrastructure savings after introducing automated retraining pipelines; the magnitude depends on workload and cloud economics.

Improved quality and trust

MLOps embeds continuous monitoring, testing, and validation into every stage. Instead of waiting for customer complaints to reveal model drift, the system can detect it early and trigger retraining automatically.
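One common way to detect input drift is the Population Stability Index (PSI), which compares the binned distribution of a feature at training time with its distribution in live traffic. The sketch below computes PSI and flags retraining above 0.25, a widely cited rule of thumb rather than a universal standard. The bin edges, data, and the `needs_retraining` trigger are hypothetical; note also that PSI captures data drift only, while concept drift (a changed relationship between inputs and labels) needs monitoring of outcome metrics.

```python
import bisect
import math
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values in each bin; out-of-range values are clipped into the end bins."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), n_bins - 1)] += 1
    return [c / len(values) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # floor empty bins to avoid log(0)
        total += (a - e) * math.log(a / e)
    return total


def needs_retraining(reference: Sequence[float], live: Sequence[float],
                     edges: Sequence[float], threshold: float = 0.25) -> bool:
    """Hypothetical trigger: flag retraining when input drift exceeds the threshold."""
    score = psi(bin_proportions(reference, edges), bin_proportions(live, edges))
    return score > threshold


edges = [0, 25, 50, 75, 100]
reference = list(range(100))         # feature values seen at training time
live_same = list(range(0, 100, 2))   # live traffic with a similar distribution
live_shifted = list(range(60, 100))  # live traffic concentrated at the high end
print(needs_retraining(reference, live_same, edges))     # False
print(needs_retraining(reference, live_shifted, edges))  # True
```

In a real pipeline, a `True` result would start a retraining job (and typically alert the owning team) rather than simply being printed.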

The result:

- More reliable predictions that customers and stakeholders can trust.

- Transparent audit trails, which are crucial in industries such as healthcare and finance.

- Compliance with regulations and governance frameworks that demand explainability and accountability.
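An audit trail is only useful if it can be trusted. One simple technique is hash chaining: each entry stores a hash of its own content plus the previous entry's hash, so editing or reordering any past entry breaks verification. The sketch below assumes events are JSON-serializable dicts; `append_event`, `verify`, and the event fields are hypothetical. Chaining makes tampering detectable, not impossible, so real deployments also store the log (or its latest hash) outside the system being audited.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(event: Dict[str, Any], prev_hash: str) -> str:
    body = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


def append_event(log: List[Dict[str, Any]], event: Dict[str, Any]) -> Dict[str, Any]:
    """Append an event whose hash covers its content and the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev_hash, "hash": _entry_hash(event, prev_hash)}
    log.append(entry)
    return entry


def verify(log: List[Dict[str, Any]]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _entry_hash(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


audit: List[Dict[str, Any]] = []
append_event(audit, {"action": "promote", "model": "credit-risk", "version": 4, "by": "alice"})
append_event(audit, {"action": "rollback", "model": "credit-risk", "version": 3, "by": "ops-bot"})
print(verify(audit))                 # True
audit[0]["event"]["by"] = "mallory"  # tamper with history
print(verify(audit))                 # False
```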

High quality builds confidence in AI adoption across the organization.

Operational stability and scalability

As businesses grow, the number of models multiplies. MLOps ensures stable, scalable operations, with:

- Autoscaling infrastructure to handle surges in requests.

- Version control to allow safe rollbacks if performance dips.

- Consistent service-level agreements (SLAs) and objectives (SLOs) across geographies, with clear service-level indicators (SLIs) for latency and availability.
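The distinction between SLIs and SLOs is easiest to see in code: SLIs are measured values (here, 95th-percentile latency and the share of successful requests), and SLOs are the targets they are checked against. The sketch below uses the nearest-rank percentile method; the `Slo` targets (200 ms, 99.9%) and the `evaluate_slis` helper are hypothetical values chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import Dict, Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


@dataclass
class Slo:
    p95_latency_ms: float = 200.0  # hypothetical latency objective
    availability: float = 0.999    # hypothetical objective: 99.9% of requests succeed


def evaluate_slis(latencies_ms: Sequence[float], successes: int, total: int,
                  slo: Slo) -> Dict[str, object]:
    """Compute latency and availability SLIs and compare them with the SLO."""
    p95 = percentile(latencies_ms, 95)
    availability = successes / total
    return {
        "p95_latency_ms": p95,
        "availability": availability,
        "latency_ok": p95 <= slo.p95_latency_ms,
        "availability_ok": availability >= slo.availability,
    }


latencies = [float(ms) for ms in range(1, 101)]  # sampled request latencies, 1..100 ms
report = evaluate_slis(latencies, successes=9995, total=10000, slo=Slo())
print(report["p95_latency_ms"], report["latency_ok"], report["availability_ok"])
# 95.0 True True
```

Running the same check per region and per model version is what turns "consistent SLAs across geographies" into something a dashboard or alert can enforce.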

This stability allows organizations to trust AI as a dependable layer of their digital strategy rather than a fragile experiment. For example, scaling predictive maintenance models plant-wide in manufacturing ensures consistent uptime and productivity gains.

Better collaboration and ROI

Perhaps the most overlooked benefit of MLOps is cultural: it bridges the gap between data science, engineering, and business. Teams collaborate more effectively with unified pipelines, standard metrics, and shared visibility.

The outcome is a higher ROI:

- Stakeholders see more precise alignment of AI projects with business objectives.

- Cross-functional silos dissolve, accelerating delivery cycles.

- Resources are invested in models that deliver measurable value, not vanity projects.

The financial return compounds over time: faster launches, fewer failures, and smarter allocation of people and infrastructure.

Real-World Case Studies of MLOps in Action

Here are some illustrative examples of MLOps in practice and how different organizations adopted it:

| Organization/Domain | Challenge | MLOps solution | Impact |
|---|---|---|---|
| Hospitality | Managing a large inventory of models across properties and geographies was costly and error-prone. Maintenance consumed disproportionate resources. | Implemented end-to-end MLOps: automated training and deployment pipelines, integrated monitoring dashboards, and governance policies. | The company achieved ~40% cost savings annually and boosted developer productivity by ~55%. Beyond numbers, this meant less firefighting, more innovation, and faster rollout of personalized guest services and improved customer experience. |
| Maritime | Multiple teams worked in silos, using different frameworks and inconsistent standards. Lack of governance led to delays and fragile systems. | Adopted a microservices architecture with strict contracts between data, models, and services. Applied MLOps practices to unify deployments and monitoring. | Achieved scalable, maintainable architecture that reduced integration errors, improved collaboration, and allowed researchers to focus on solving maritime safety challenges instead of debugging pipelines. |
| Data Professionals Survey | Analysts and engineers spent most of their time on routine, repetitive tasks like prepping data, instead of strategic work. | Organizations adopting MLOps introduced automated data ingestion pipelines, standardized preprocessing, and experiment tracking tools. | Freed up significant analyst time, enabling teams to focus on building new models. Productivity gains led to faster business insights and greater employee satisfaction, reducing burnout and turnover. |

Practical Steps to Get Started with MLOps

Implementing MLOps may initially feel overwhelming, especially for organizations just beginning their AI journey. The good news: the path doesn’t have to be disruptive. With the proper framework, companies can gradually introduce automation, build governance practices, and align data science with IT operations. By breaking down the process into clear steps, organizations can adopt MLOps pragmatically, reducing risks, building internal confidence, and laying the groundwork for long-term AI success.

Thinking about adopting MLOps? Here’s a roadmap:

- Audit your current ML lifecycle: map how you collect data, how many models you train, how you deploy them, and what monitoring you have.

- Standardize tools and processes: choose experiment tracking, model versioning and a model registry (e.g., MLflow), dataset versioning (e.g., DVC), a feature store, and CI/CD.

- Automate the lifecycle: build pipelines for data, training, evaluation, deployment, and monitoring, with gated approvals and rollback policies.

- Set up governance and monitoring: define metrics, drift detection, and governance policies, including data privacy.

- Build culture and skills: data scientists, ML engineers, operations, and business stakeholders must align on expectations, quality, and roles.

Conclusion

In a world where innovation cycles are accelerating rapidly, MLOps is the backbone of any serious AI initiative. Organizations that master MLOps gain stability, speed, quality, and cost-effectiveness. They move from proofs of concept to production systems that continuously adapt, scale, and deliver business value.

MLOps is an operational culture that demands discipline, collaboration, automation, and governance. Integrating MLOps into strategy is essential for businesses that want to unlock AI's true potential.

At Emerline, we specialize in AI/ML development and MLOps practices that help enterprises transform experimental models into robust, production-grade systems. Let us guide your AI journey! Explore our AI and Machine Learning solutions today.

Published on Oct 24, 2025