
Custom BI Solution Development for Transparent Workforce Utilization

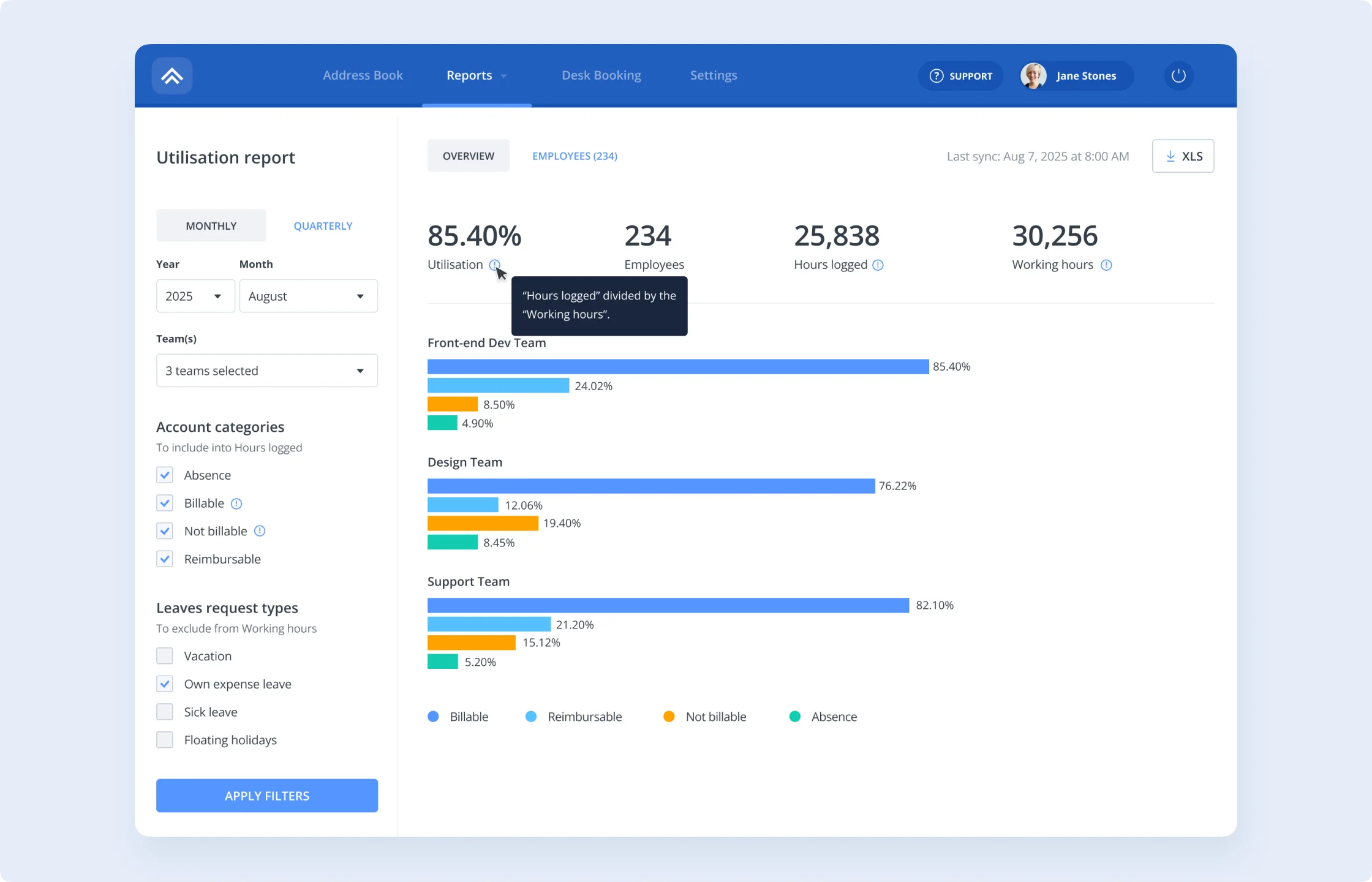

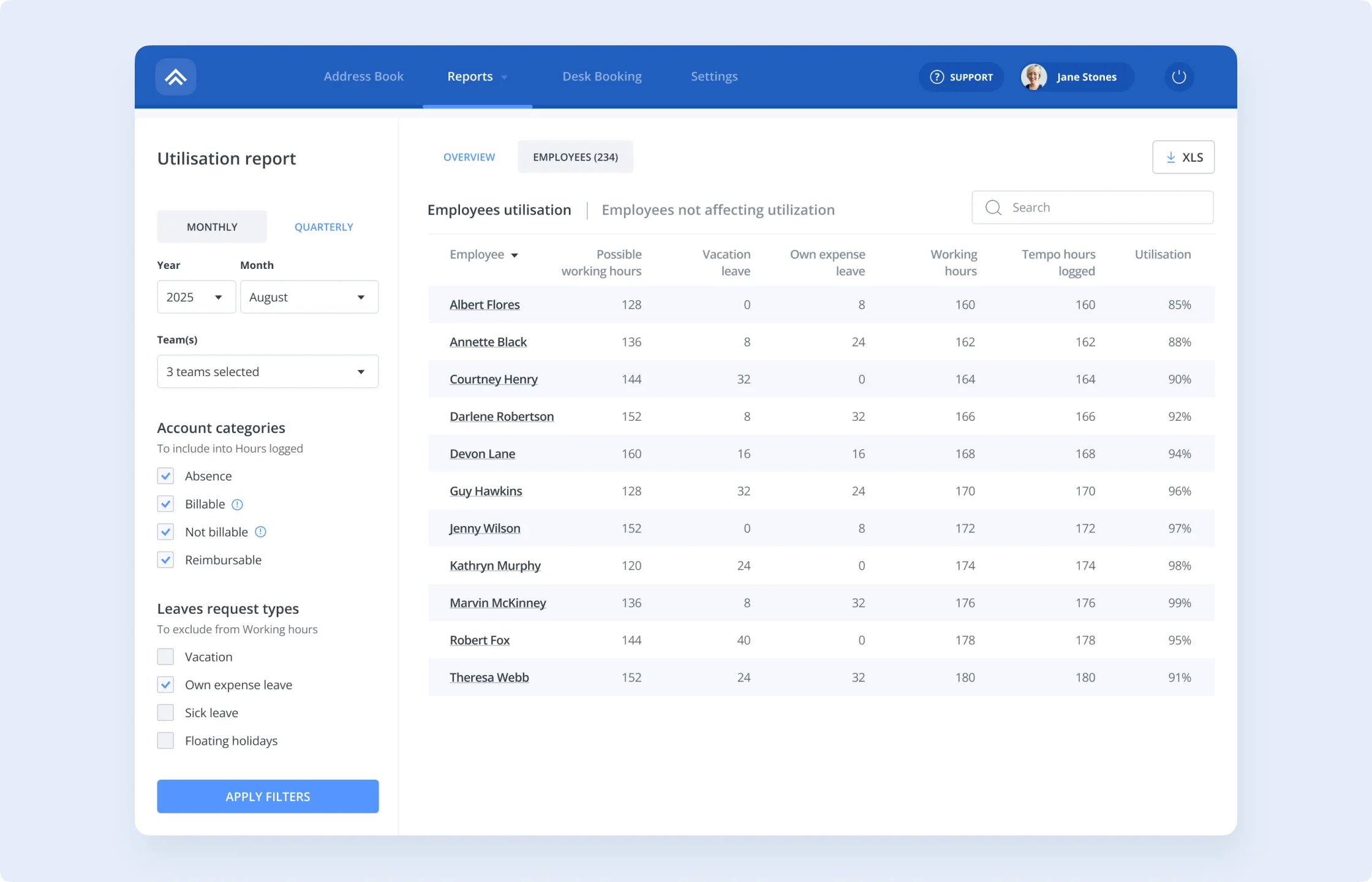

Emerline created an analytical solution that provided transparent monitoring of labor resources and enabled the optimization of key business processes: planning, project management, human resources, and finance.

Background

Our client is a large consulting company with more than 10 offices in 15 countries. It initiated a project to implement a unified analytical system to enhance transparency in labor resource utilization. This was particularly crucial, as the company's resources are simultaneously involved in over 40 projects. The solution was intended to provide transparency across all seniority levels of employees.

Preconditions leading to the need for change:

- Manual reporting management

Before the new solution, data collection and analysis regarding staff workload were performed manually. This process was extremely labor-intensive, slow, and prone to errors, leading to outdated and inaccurate data on the actual availability and occupancy of teams.

- Billing and project planning issues

The lack of precise workload information directly hindered accurate client billing and efficient planning of new projects. The company could not allocate resources in a timely manner, assess profitability, or adequately manage client expectations, resulting in financial losses and reduced service quality.

- Management and financial "pain points"

Accumulated inaccuracies and delays in data created serious problem areas at both the individual project level and in strategic financial management of the company. It became evident that a qualitatively different, more accurate, and fully automated approach to labor cost analytics was required.

- Challenges with geographically dispersed teams

Given the company's global structure, processing resource utilization data from geographically dispersed teams presented a unique challenge. The necessity to account for local calendars, public holidays, and various time zones made manual reporting unification practically unfeasible, preventing the formation of a unified, reliable picture of overall staff workload.

These factors collectively demonstrated a critical need for a unified, centralized analytics system capable of ensuring transparency and control over the company's key asset – its employees.
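
To illustrate why local calendars and time zones matter for a unified workload picture, here is a minimal sketch of a utilization calculation. It is not the client's implementation: the office names, holiday dates, fixed UTC offsets, and the 8-hour working day are assumptions made for the example, and fixed offsets ignore daylight saving time.

```python
from datetime import date, datetime, timedelta, timezone

# Hypothetical per-office public holidays; real calendars would come from HR data.
OFFICE_HOLIDAYS = {
    "office_a": {date(2024, 5, 1)},
    "office_b": {date(2024, 5, 6), date(2024, 5, 27)},
}


def local_work_date(logged_at_utc: datetime, utc_offset_hours: int) -> date:
    """Map a timezone-aware UTC timestamp to the calendar date in the office's zone."""
    office_tz = timezone(timedelta(hours=utc_offset_hours))
    return logged_at_utc.astimezone(office_tz).date()


def working_days(start: date, end: date, holidays: set[date]) -> int:
    """Count Monday-Friday days in [start, end] that are not local public holidays."""
    days = 0
    current = start
    while current <= end:
        if current.weekday() < 5 and current not in holidays:
            days += 1
        current += timedelta(days=1)
    return days


def utilization(logged_hours: float, start: date, end: date,
                holidays: set[date], hours_per_day: float = 8.0) -> float:
    """Return logged hours divided by the capacity of the local working calendar."""
    capacity = working_days(start, end, holidays) * hours_per_day
    return logged_hours / capacity if capacity else 0.0
```

With these assumed calendars, May 2024 has 22 working days for `office_a` and 21 for `office_b`, so the same 132 logged hours produce different utilization figures for the two offices. Reconciling that difference by hand across every office is what made unified manual reporting impractical.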

Methodology

Goal definition and in-depth problem analysis

Architecture evaluation and design

Collaborative development and seamless data integration

Quality assurance, deployment, and optimization

Solution

We developed a comprehensive analytics platform that provides automated and transparent monitoring of labor resource utilization. This specialized BI solution enables deep analysis and categorization of labor costs, providing management with consolidated and reliable information for strategic decision-making.

Key functional and technological features of the solution:

Business process automation

The solution automates critical operations across planning, project management, human resources, and finance, including time tracking, comprehensive analysis of labor resource utilization, internal reporting, and strategic planning. This replaces manual and inefficient methods that previously required significant effort, substantially increasing operational efficiency.

Functionality modules

- Data ingest: data sources, including Tempo Jira and internal systems, were integrated via Airbyte for efficient information loading. Airbyte deployments were managed via the Airbyte Terraform provider, which allowed them to be integrated into GitLab pipelines.

- Data storage: the platform used Amazon S3 as the foundational storage layer, paired with Apache Iceberg as the table format. This setup allowed storing and managing large volumes of semi-structured data (e.g., JSON) with full ACID guarantees, schema evolution, and time travel support. It enabled both developers and analysts to query historical snapshots, track changes over time, and work reliably with evolving data structures.

- Compute: the solution used Amazon Athena as the primary query engine, handling both scheduled dbt transformations and interactive analytical queries. Its integration with Apache Iceberg enabled direct access to the data lake without additional ETL steps. Being fully serverless, Athena required no infrastructure management and operated on a pay-per-query basis, which reduced DevOps overhead and kept scaling cost-effective for both engineering workflows and BI workloads.

- Data transformation: dbt (data build tool) was used to define and document transformation logic using SQL. Its native integration with Git enabled clean collaboration, version control, and code reviews across the team. The project also leveraged dbt’s built-in lineage tracking, which provided both the development team and end users with a clear view of model dependencies, column-level metadata, and descriptions, effectively serving as a lightweight, self-updating data catalog.

- Data quality: Great Expectations was integrated into the pipeline to validate critical datasets and enforce data quality rules. Expectations ran automatically during transformation steps, allowing early detection of anomalies and ensuring trust in downstream analytics.

- Orchestration: Apache Airflow was used to orchestrate ingestion, transformation, and data publishing. To reduce manual effort, Astronomer Cosmos was used to automatically convert dbt models and tags into Airflow tasks, simplifying DAG creation and ensuring consistency between transformation logic and orchestration.

- Monitoring and alerting: Amazon CloudWatch was used to track pipeline execution, log application events, and trigger alerts based on failure conditions or performance anomalies. This ensured visibility into operational health and helped resolve issues proactively.

- Dashboards: intuitive JavaScript-based dashboards were developed, providing comprehensive visualization of key resource utilization metrics and enabling quick management decisions.

- Data export: for user convenience, data export functionality to Excel is provided.
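
The data quality module listed above relies on Great Expectations. The sketch below deliberately does not use that library's API; it is a plain-Python illustration of the kind of rules such a step might enforce on time-log records before they reach the dashboards. The column names (`employee_id`, `project_key`, `hours`) and the 0 to 24 hour range are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class RuleResult:
    """Outcome of one data quality rule over a batch of rows."""
    rule: str
    failed_rows: list[int]

    @property
    def passed(self) -> bool:
        return not self.failed_rows


def check_not_null(rows: list[dict], column: str) -> RuleResult:
    """Flag rows where the column is missing or None."""
    failed = [i for i, row in enumerate(rows) if row.get(column) is None]
    return RuleResult(f"{column} is not null", failed)


def check_between(rows: list[dict], column: str, low: float, high: float) -> RuleResult:
    """Flag rows whose non-null value falls outside [low, high]."""
    failed = [
        i for i, row in enumerate(rows)
        if row.get(column) is not None and not (low <= row[column] <= high)
    ]
    return RuleResult(f"{column} between {low} and {high}", failed)


def validate_worklogs(rows: list[dict]) -> list[RuleResult]:
    """Run the hypothetical rule set for a batch of time-log records."""
    return [
        check_not_null(rows, "employee_id"),
        check_not_null(rows, "project_key"),
        check_between(rows, "hours", 0, 24),
    ]
```

A pipeline step could stop publishing when any `RuleResult.passed` is false, which is the early-detection behavior described for the data quality module.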

Lakehouse architecture

The solution is built on a modern Lakehouse architecture, utilizing Amazon Athena for high-performance SQL analytics and Apache Iceberg table format on Amazon S3 for scalable and cost-effective storage of large data volumes. This ensures flexibility and the ability to work with various data types.
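
As an illustration of how historical snapshots can be queried in this kind of setup, here is a small sketch that builds an Athena SQL statement using the `FOR TIMESTAMP AS OF` time-travel clause for Iceberg tables. The table name `analytics.worklogs` and the columns `project_key` and `hours` are hypothetical, and the sketch only constructs the query text; submitting it to Athena is left out.

```python
import re
from datetime import datetime, timezone

# Accept only simple "table" or "database.table" identifiers to keep the SQL safe.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)?")


def time_travel_query(table: str, as_of: datetime) -> str:
    """Build a query that reads an Iceberg table as it was at the given moment."""
    if not _IDENTIFIER.fullmatch(table):
        raise ValueError(f"unexpected table identifier: {table!r}")
    if as_of.tzinfo is None:
        raise ValueError("as_of must be timezone-aware")
    # Normalize to UTC so the literal in the query is unambiguous.
    ts = as_of.astimezone(timezone.utc).strftime("%Y-%m-%d %H:%M:%S")
    return (
        "SELECT project_key, SUM(hours) AS total_hours "
        f"FROM {table} FOR TIMESTAMP AS OF TIMESTAMP '{ts} UTC' "
        "GROUP BY project_key"
    )


# Example: hours per project as recorded at noon UTC on 1 May 2024.
query = time_travel_query(
    "analytics.worklogs", datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
)
```

Running the same statement without the time-travel clause returns the current state of the table, so comparing the two results shows how logged hours changed after a given reporting date.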

Information processing volume

The system processes over 250,000 records monthly and stores 2 million historical records in JSON format, including time logs and metadata. This guarantees a complete analytical picture.

Information security measures

Strict measures were implemented to ensure data security, complying with the company's internal policies. SSO authentication (Single Sign-On), data encryption, Role-Based Access Control, and data anonymization at the storage level are applied for maximum protection of confidential information.
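
The case study does not specify how anonymization and role-based access were implemented. As one possible approach, the sketch below pseudonymizes employee identifiers with a keyed hash (HMAC-SHA256) before storage and filters columns by role at read time. The role names, column names, and key handling are all assumptions made for the example.

```python
import hashlib
import hmac

# Hypothetical mapping of roles to the columns they may read.
ROLE_COLUMNS = {
    "executive": {"project_key", "hours", "employee_token", "cost"},
    "project_manager": {"project_key", "hours", "employee_token"},
    "analyst": {"project_key", "hours"},
}


def pseudonymize(employee_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a stable token that cannot be reversed without the key."""
    return hmac.new(secret_key, employee_id.encode("utf-8"), hashlib.sha256).hexdigest()


def anonymize_row(row: dict, secret_key: bytes) -> dict:
    """Return a copy of the row with employee_id swapped for a pseudonymous token."""
    stored = {k: v for k, v in row.items() if k != "employee_id"}
    stored["employee_token"] = pseudonymize(row["employee_id"], secret_key)
    return stored


def visible_row(row: dict, role: str) -> dict:
    """Keep only the columns the role is allowed to see; unknown roles see nothing."""
    allowed = ROLE_COLUMNS.get(role, set())
    return {k: v for k, v in row.items() if k in allowed}
```

Because the same identifier always maps to the same token under a given key, per-employee aggregations still work on the stored data, while the raw identifier never reaches the storage layer.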

Scalability

The solution is designed to support over 500 users, ensuring stable operation under continuously growing loads.

Technology Stack

The solution is built on a robust and scalable technology stack, ensuring efficient data processing and visualization.

Results

The implementation of the solution brought significant measurable business benefits to the client, fully aligning with the set goals:

- Increased transparency in resource utilization: internal management gained transparent and consolidated analytics on labor resource utilization, which was previously impossible with manual reporting.

- Automated reporting: manual reporting processes were entirely replaced by an automated solution, significantly increasing efficiency and reducing labor costs.

- Improved billing and planning control: thanks to accurate data obtained through the new reporting system, the client was able to significantly improve control over project billing and optimize resource planning.

- Successful project execution: all technical requirements for the solution were fully met.

- Economic efficiency: the solution was optimized for low maintenance costs, ensuring its long-term profitability.

- Adherence to deadlines and budget: the project was successfully implemented within the established deadlines (1.5 months) and budget.

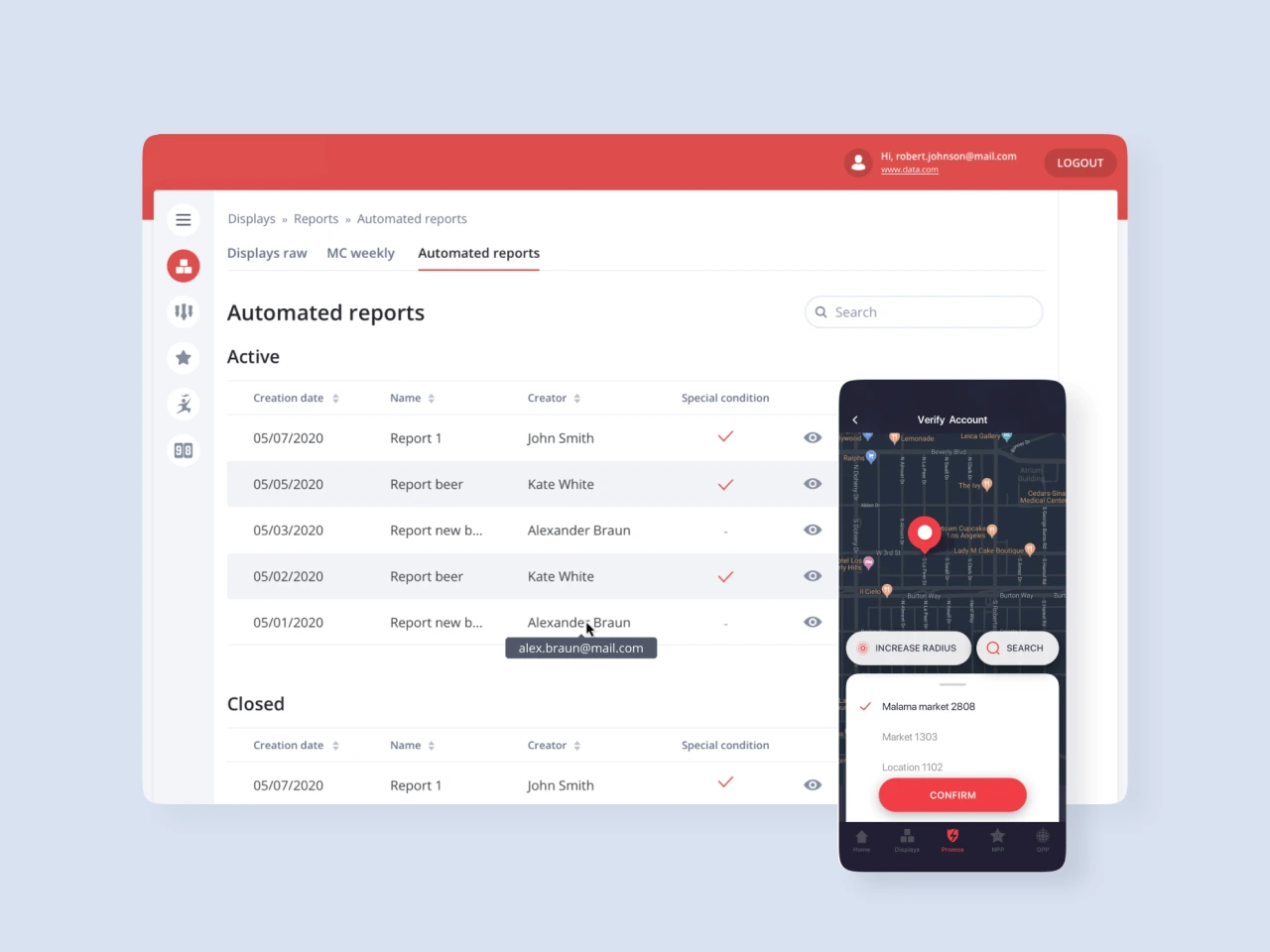
