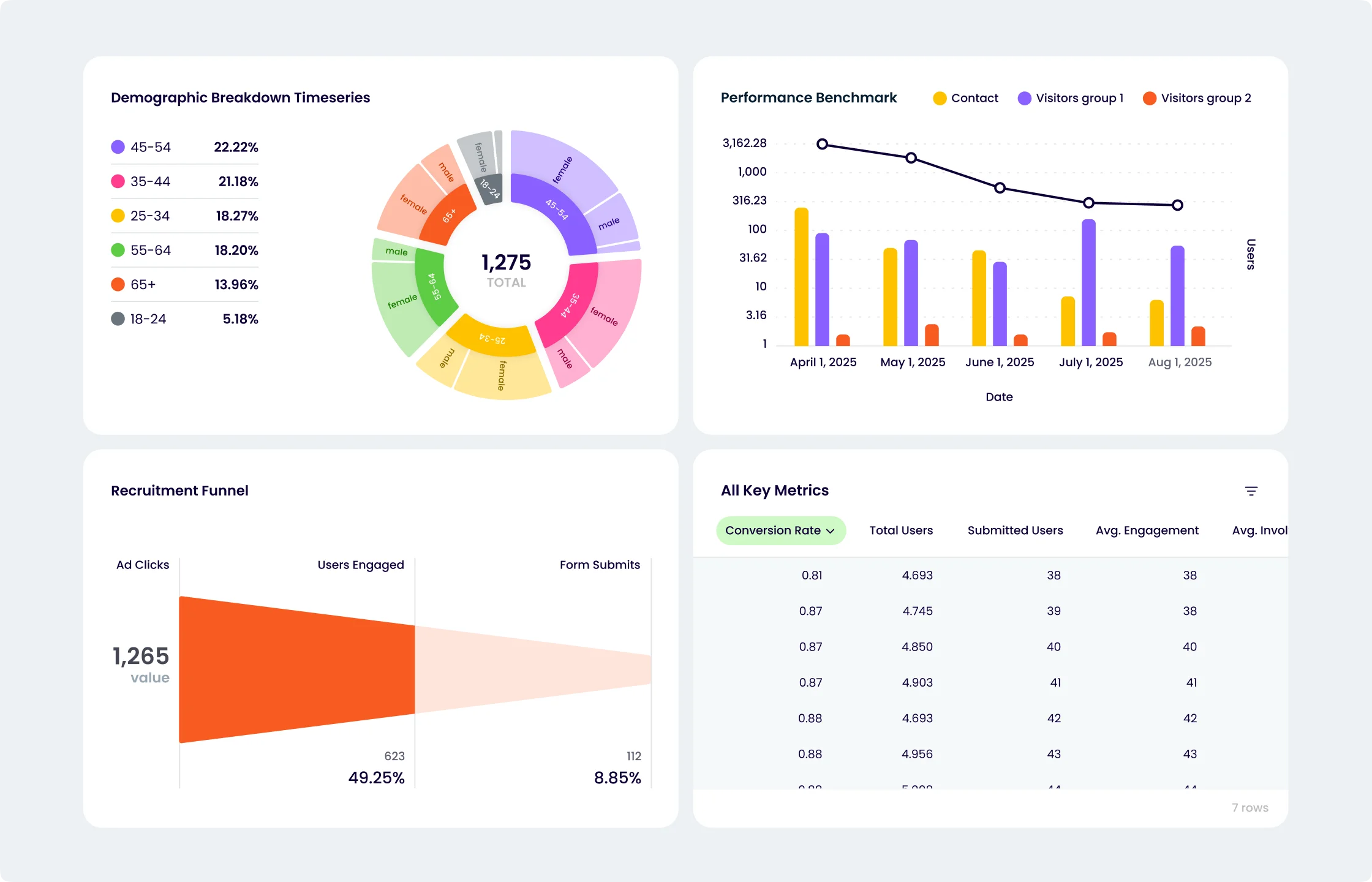

Scalable Analytics Platform for Digital Healthcare

Emerline developed and implemented a scalable analytics platform for a healthcare company, enabling them to provide transparent reporting and real-time analytics to their clients.

Client and Challenge

Our client is a Scandinavian innovator operating in the digital healthcare industry. The company offers technology solutions that collect data from various advertising sources (Facebook Ads, Google Ads, social media) and from user activity on their website (events), and then build dashboards comparing user activity with advertising campaign performance. The end-users of the developed solution are the company's own clients.

When the company approached Emerline, it faced a number of critical challenges that threatened its ability to scale and effectively serve its clients:

- Extreme scalability requirements

The primary challenge was the need to create a solution capable of handling the current user base and seamlessly scaling 10x by year-end and 100x within a few years, without requiring significant additional DevOps or development resources.

- High data ingest and analytics costs

The client needed to collect data from various advertising sources and user activity and to build dashboards comparing this data, under strict limits on both the total project cost and the cost of ongoing support. This made cost-effectiveness the deciding factor in tool selection, most notably in choosing self-hosted Metabase as the BI tool and Amazon Athena as the query engine behind it.
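Athena suits a cost-capped project partly because of its pay-per-query model. You are billed for the bytes each query scans, not for an always-on cluster. The minimal Python sketch below estimates a monthly query bill under that model.

Several inputs are assumptions to check against current regional pricing: the price per TB, the 10 MB per-query minimum, rounding up to whole megabytes, and binary units. They reflect our understanding of AWS's published Athena pricing at the time of writing. The workload figures are invented for illustration and are not taken from this project.

```python
# Assumed pricing parameters; verify against current AWS pricing for your region.
PRICE_PER_TB_USD = 5.0                # assumption: price per TB scanned
BYTES_PER_MB = 1024**2                # assumption: binary units
BYTES_PER_TB = 1024**4
MIN_BILLED_BYTES = 10 * BYTES_PER_MB  # assumption: 10 MB minimum per query


def billed_bytes(scanned_bytes: int) -> int:
    """Round one query's scan up to a whole MB and apply the per-query minimum."""
    whole_mb = -(-scanned_bytes // BYTES_PER_MB)  # ceiling division
    return max(whole_mb * BYTES_PER_MB, MIN_BILLED_BYTES)


def monthly_cost_usd(
    scanned_bytes_per_query: int,
    queries_per_month: int,
    price_per_tb: float = PRICE_PER_TB_USD,
) -> float:
    """Estimate the monthly bill for a workload of identical queries."""
    total_bytes = billed_bytes(scanned_bytes_per_query) * queries_per_month
    return total_bytes / BYTES_PER_TB * price_per_tb
```

Consider a hypothetical dashboard query that scans 200 MB and runs 30,000 times a month: `monthly_cost_usd(200 * 1024**2, 30_000)` comes to roughly $28.61. If the same workload scans only 2 MB per query, it is billed at the 10 MB minimum and costs roughly $1.43. Because cost tracks scanned bytes, keeping per-query scans small matters more as data volume grows.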

- Complexity of data security and GDPR compliance

In the healthcare and digital marketing industries, working with user data required strict implementation of Row-Level Security (RLS) and full compliance with the General Data Protection Regulation (GDPR). This posed a significant challenge within the chosen architecture.
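The source does not describe how RLS was enforced. One common pattern, when the BI layer queries Athena directly, works in two steps:

- give each client a view that filters the shared tables down to that client's rows;
- grant each client's dashboards access only to their own views.

The Python sketch below generates such a view definition. The `client_id` column, the naming convention, and the helper itself are hypothetical. The sketch covers only row filtering, not GDPR obligations such as handling erasure requests.

```python
import re

# Hypothetical convention: database, table, and client identifiers are lowercase slugs.
_SLUG = re.compile(r"[a-z0-9_]{1,64}")


def client_view_ddl(database: str, source_table: str, client_id: str) -> str:
    """Return SQL that creates a view exposing only one client's rows.

    Assumes `source_table` has a `client_id` column. Every identifier is checked
    against a strict pattern before it is interpolated into the SQL text, so a
    malformed value cannot alter the statement.
    """
    for value in (database, source_table, client_id):
        if not _SLUG.fullmatch(value):
            raise ValueError(f"invalid identifier: {value!r}")
    view = f"{source_table}_{client_id}"
    return (
        f"CREATE OR REPLACE VIEW {database}.{view} AS\n"
        f"SELECT * FROM {database}.{source_table}\n"
        f"WHERE client_id = '{client_id}'"
    )
```

For example, `client_view_ddl("analytics", "campaign_events", "acme")` yields a definition for `analytics.campaign_events_acme`. How access to each view is then restricted, for example through IAM policies or BI-tool permissions, is outside this sketch.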

- Disparate data sources and lack of unified analytics

Data came from numerous disparate advertising platforms and user behavior analytics systems (PostHog), which made it difficult to unify the data and obtain a comprehensive picture of campaign effectiveness.
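Unifying feeds like these usually means mapping each source's records into one shared schema before comparing them. The Python sketch below shows that idea for two ad platforms.

- The `CampaignMetric` schema and the mapper functions are hypothetical.
- The input keys mirror field names from the Facebook Marketing API insights (`date_start`, `spend`) and the Google Ads query language (`segments.date`, `metrics.cost_micros`).
- Flattening those fields into dotted keys is an assumption about how the raw records land.

PostHog events would need a separate mapping and are left out.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CampaignMetric:
    """Hypothetical shared schema for daily campaign performance."""
    source: str
    day: date
    campaign_id: str
    impressions: int
    clicks: int
    spend: float  # in the ad account's currency


def from_facebook(row: dict) -> CampaignMetric:
    # Assumed flattened Facebook Ads insights record; numeric values arrive as strings.
    return CampaignMetric(
        source="facebook_ads",
        day=date.fromisoformat(row["date_start"]),
        campaign_id=str(row["campaign_id"]),
        impressions=int(row["impressions"]),
        clicks=int(row["clicks"]),
        spend=float(row["spend"]),
    )


def from_google(row: dict) -> CampaignMetric:
    # Assumed flattened Google Ads record; cost is reported in micros (1e-6 units).
    return CampaignMetric(
        source="google_ads",
        day=date.fromisoformat(row["segments.date"]),
        campaign_id=str(row["campaign.id"]),
        impressions=int(row["metrics.impressions"]),
        clicks=int(row["metrics.clicks"]),
        spend=row["metrics.cost_micros"] / 1_000_000,
    )


MAPPERS = {"facebook_ads": from_facebook, "google_ads": from_google}


def unify(source: str, rows: list[dict]) -> list[CampaignMetric]:
    """Map raw rows from one source into the shared schema."""
    mapper = MAPPERS[source]  # raises KeyError for an unknown source
    return [mapper(row) for row in rows]
```

Once both sources share one schema, comparisons such as cost per click across platforms become a single query over one table.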

Methodology

Phase 1: Requirements definition and cost analysis (cost-optimization focus)

Phase 2: Architecture designed for scalable growth

Phase 3: ETL and data transformation development

Phase 4: Security and compliance implementation

Phase 5: Deployment and monitoring

Justification for Technology Stack Selection

The choice of this technology stack is driven by the aim to ensure high performance, scalability, flexibility, and cost-effectiveness of the solution, as well as to accommodate the specifics of working with large volumes of distributed data:

- Amazon Athena and Amazon S3 (Lakehouse Architecture)

This combination creates a Lakehouse architecture that stores vast amounts of raw data in S3 (cost-effective and scalable object storage) and queries it directly with standard SQL via Athena, without moving the data into a traditional data warehouse. This provides flexibility, reduces ETL costs, and supports a variety of data formats, such as JSON.
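To illustrate the query-in-place model, the Python sketch below builds the two pieces involved:

- DDL that registers JSON files under an S3 prefix as an external Athena table;
- the keyword arguments for boto3's Athena `start_query_execution` call.

The database, table, column, and bucket names are hypothetical, as is the `dt` partition column. `org.openx.data.jsonserde.JsonSerDe` is one of the JSON SerDes Athena supports.

```python
import re

_IDENT = re.compile(r"[a-z_][a-z0-9_]*")


def json_table_ddl(database: str, table: str, columns: dict[str, str], s3_location: str) -> str:
    """Build DDL mapping JSON files under an S3 prefix to an Athena table.

    No data is copied: Athena reads the JSON objects in place at query time.
    """
    for name in (database, table, *columns):
        if not _IDENT.fullmatch(name):
            raise ValueError(f"invalid identifier: {name!r}")
    if "dt" in columns:
        raise ValueError("'dt' is reserved for the partition column")
    if not s3_location.startswith("s3://"):
        raise ValueError("location must be an s3:// URI")
    cols = ",\n  ".join(f"`{name}` {sql_type}" for name, sql_type in columns.items())
    return (
        f"CREATE EXTERNAL TABLE IF NOT EXISTS {database}.{table} (\n"
        f"  {cols}\n"
        f")\n"
        f"PARTITIONED BY (dt string)\n"
        f"ROW FORMAT SERDE 'org.openx.data.jsonserde.JsonSerDe'\n"
        f"LOCATION '{s3_location}'"
    )


def athena_request(sql: str, database: str, output_location: str) -> dict:
    """Keyword arguments for boto3's Athena `start_query_execution`."""
    return {
        "QueryString": sql,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }
```

Passing the result to `boto3.client("athena").start_query_execution(**athena_request(ddl, "analytics", "s3://example-bucket/results/"))` would submit the DDL. Because the table is partitioned, new partitions must still be registered before queries can see them. With Hive-style `dt=...` prefixes in S3, this can be done with `MSCK REPAIR TABLE` or with partition projection.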

- Airbyte

Airbyte was chosen for its efficient, seamless data loading from a variety of sources, including internal ones. Its extensive library of connectors simplifies integration and reduces data pipeline development time.
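Airbyte connectors that support incremental syncs track a cursor field, such as an updated-at timestamp. They persist its latest value as state between runs, so each sync reads only new or changed records. The plain-Python sketch below illustrates that mechanism. It is not Airbyte's implementation, and the `updated_at` field name is hypothetical.

```python
from typing import Iterable, Optional


def incremental_batch(
    records: Iterable[dict], cursor_field: str, state: Optional[str]
) -> tuple[list[dict], Optional[str]]:
    """Select records at or after the saved cursor and compute the next cursor.

    Cursor values are compared as strings. This orders ISO-8601 timestamps
    correctly only if they share one format and time zone (an assumption here).
    The comparison is inclusive: a record equal to the saved cursor is emitted
    again rather than risk being skipped, so downstream deduplication is needed.
    """
    batch = [r for r in records if state is None or r[cursor_field] >= state]
    next_state = max((r[cursor_field] for r in batch), default=state)
    return batch, next_state
```

On the first run (`state=None`) every record is read. Later runs pass the returned cursor back in and receive only records at or after it.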

Collectively, this technology stack allowed for the creation of a flexible, scalable, and cost-effective solution capable of processing large volumes of data, ensuring high transparency, and supporting complex analytics for geographically dispersed teams, fully meeting the client's stated business objectives.

Solution

We developed a comprehensive analytics platform that automates the entire client reporting process, from data ingestion and processing to visualization. Our client's customers now have on-demand access to service performance information. This demonstrates the value of the service, ensures transparency, and supports data-driven decision-making.

Key functional modules and technological features of the solution:

- Data integration
- Data processing and transformation
- Embedded analytics and visualization
- High scalability
- Cost-efficient BI access via multi-catalog architecture
- Row-level security (RLS) and GDPR compliance

Technology Stack

The solution is built on the AWS cloud platform using Databricks, ensuring high performance, scalability, and security for big data analytics.

Results

Upon successful completion, the project demonstrated cost-effectiveness, flexibility, and scalability, fully meeting the client's key requirements.

Key achievements at the current stage:

- Real-time metrics access

Ensured fast, on-demand access to analytical metrics, which significantly accelerated the process of gaining insights and making decisions.

- Team unification

A single source of truth was established, unifying team workflows and ensuring data consistency.

- Data management centralization

Data management was centralized for multiple business units, ensuring data consistency and accuracy across the entire organization.

- Scalability

The solution's architecture, built on Databricks on AWS, is designed to handle a 100x increase in the user base without significant additional resources, so the business can grow without technological limitations.

- Cost-effectiveness and flexibility

The solution demonstrated high cost-effectiveness and flexibility, fully meeting the client's key requirements and staying within budget constraints.

- Reliable data architecture

Data engineering and the implementation of ETL processes on Databricks were successfully completed, providing a reliable and stable foundation for the platform's further development.

- Support and maintenance

We provided comprehensive documentation and conducted training to support a smooth rollout and the ongoing operation of the platform.

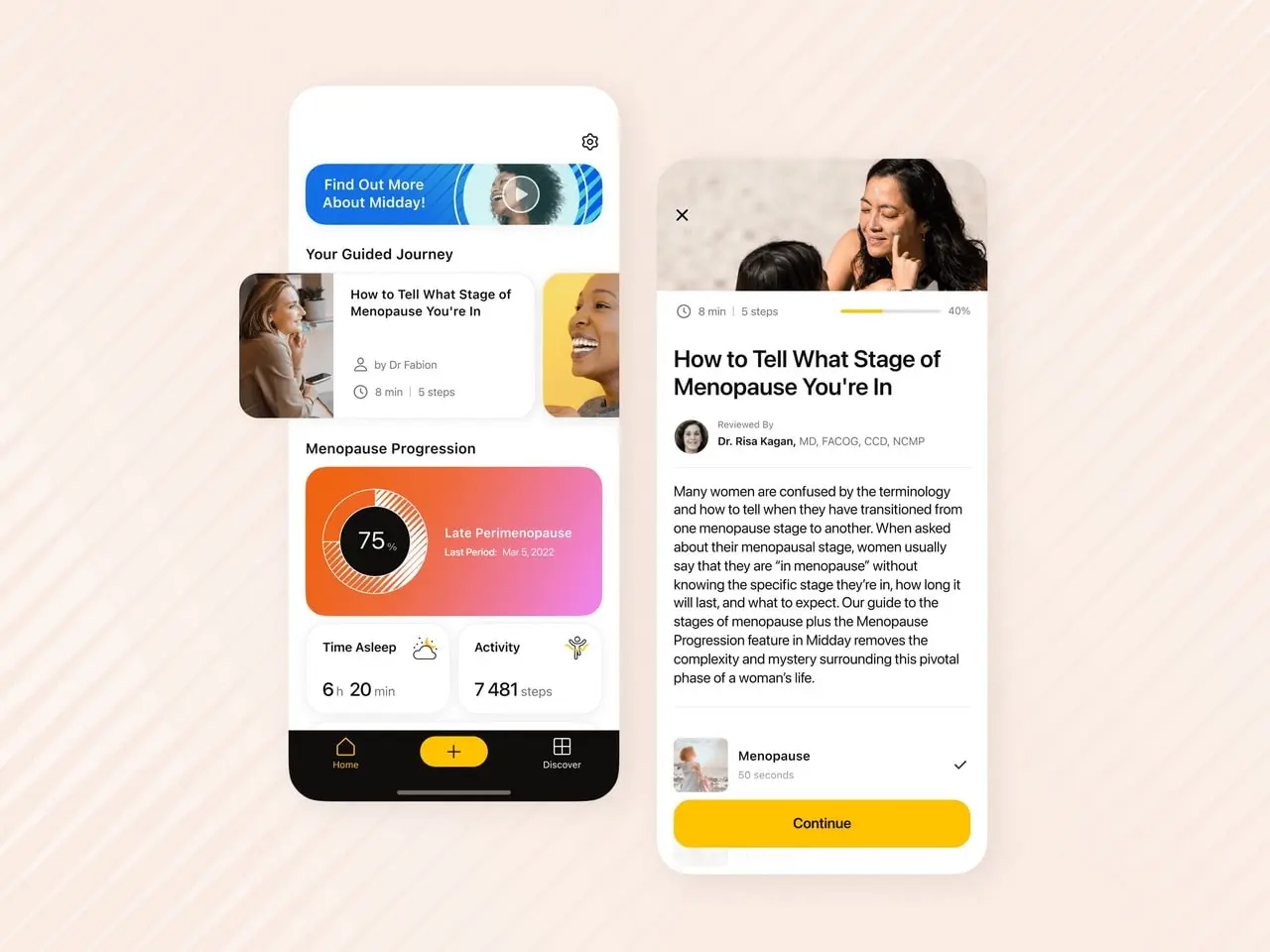

Advanced women's health app that combines a scientific approach with data analytics to help manage menopause symptoms comfortably.

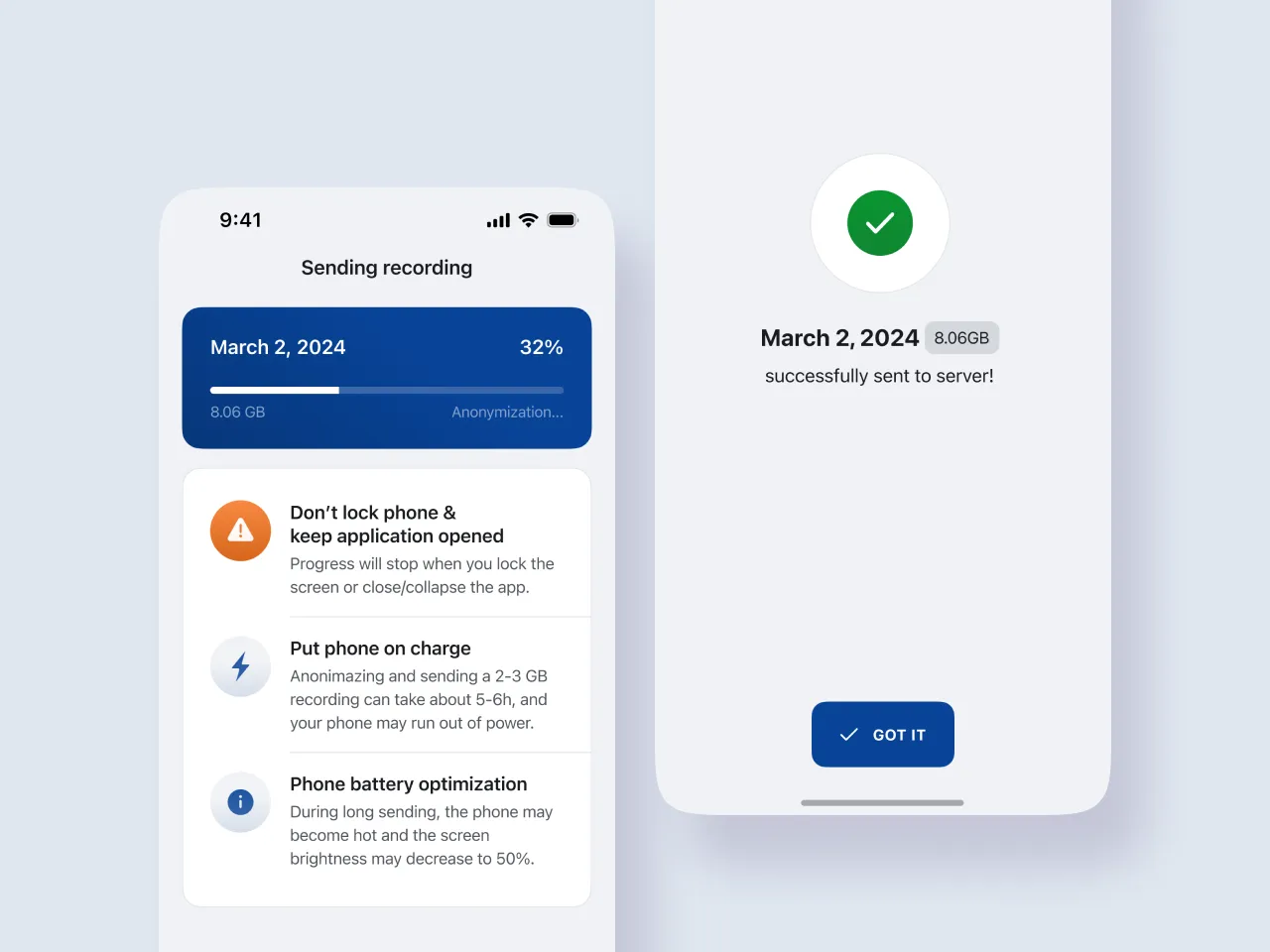

Advanced AI-powered iOS Application Integrated with Innovative Health Tech Software Platform