Chatbots and GPT in Health Apps: Human Conversational Design vs. AI

Table of contents

- How Are Chatbots and GPT Created?

- Chatbots as a Feature in Healthtech

- ChatGPT and Its Role in Healthtech

- When to Use ChatGPT in Healthcare

- Research

- Clinical documentation

- Medication management

- Telemedicine

- Remote patient monitoring

- When to Avoid ChatGPT in Health Tech

- Mental health issues

- Diagnosis

- Emergency situations

- Interpretation of medical imaging

- Takeaway

The Internet is abuzz over ChatGPT and the many ways people use it: doing homework, answering frequently asked questions, planning diets, analyzing large volumes of data, and even developing software. Sounds like magic? In fact, ChatGPT has already passed exams and coding interviews, and it has written essays for Cardiff students.

The technology became the fastest-growing consumer application at the time, reaching 100 million users within two months of its launch. Part of the reason is its versatility: people use it every day to automate their work and learn new things. One of the most debated topics, however, is the potential use of ChatGPT in healthcare.

Let’s explore the role of this groundbreaking tool in health tech, see how it differs from “time-honored” chatbots, and look at what you should and shouldn’t use it for.

How Are Chatbots and GPT Created?

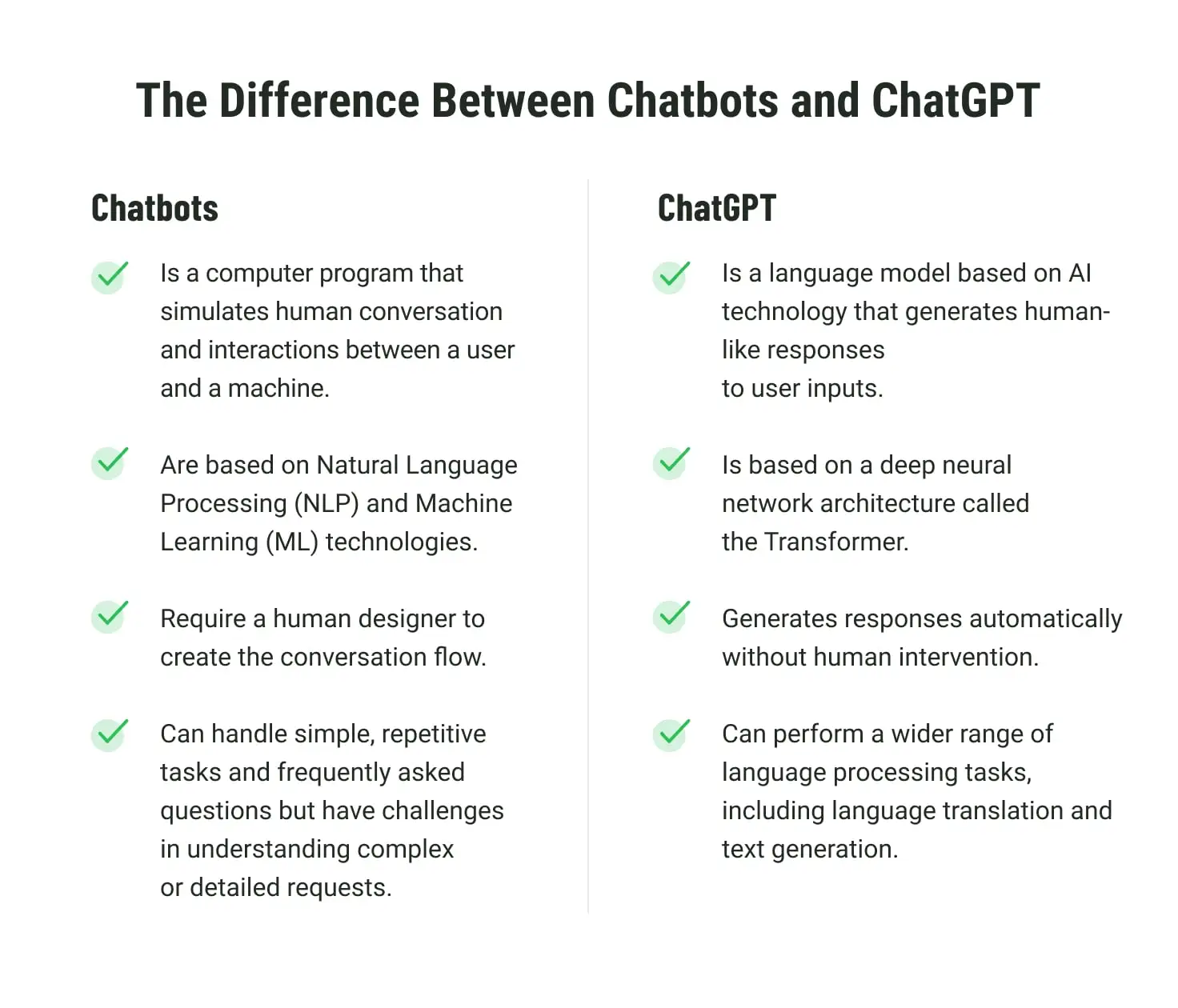

A chatbot is a computer program that simulates human conversation using natural language, in either text or spoken form. Chatbots rely primarily on Natural Language Processing (NLP) and Machine Learning (ML) technologies to understand and respond to user input.

GPT (Generative Pre-trained Transformer) is an AI language model developed by OpenAI that can process and generate human-like text. It’s important to understand that GPT can power chatbots but is not a chatbot itself: a chatbot requires additional programming and design to function as a conversational agent. GPT is built on the Transformer, a deep neural network architecture introduced by Google researchers in 2017.

Although both are used to simulate conversation with human users, they differ in how they operate. Traditional chatbots handle simple, repetitive tasks and frequently asked questions well, while GPT models are more versatile and can perform a wider range of language tasks, including translation and text generation. Traditional chatbots may struggle with complex or detailed requests, which GPT models are better suited to handle.


Chatbots as a Feature in Healthtech

According to one market estimate, the healthcare chatbot market was valued at USD 194.85 million in 2021 and is projected to reach USD 943.64 million by 2030, growing at a CAGR of 19.16% from 2022 to 2030. The COVID-19 pandemic accelerated the adoption of healthcare chatbots because of their ability to provide immediate responses. More than 1,000 COVID-19-specific chatbots were launched on Microsoft's chatbot platform alone, and the World Health Organization and the U.S. Centers for Disease Control and Prevention also launched COVID-19 chatbots.
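As a quick check on these figures, compounding the 2021 value at the stated growth rate over the nine years to 2030 reproduces the projection to within rounding:

```latex
V_{2030} = V_{2021}\,(1 + \text{CAGR})^{9}
         = 194.85 \times 1.1916^{9}
         \approx 194.85 \times 4.8437
         \approx 943.8 \ \text{(USD million)}
```

The small gap from the reported USD 943.64 million comes from the growth rate being rounded to two decimal places.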

Chatbots are no longer new, and healthcare organizations are actively adopting them. It’s hard to imagine a modern healthcare app without an AI-powered chatbot or virtual assistant. People use healthcare chatbots to schedule and track appointments and to get medical and drug information, while healthcare providers use them to build relationships with potential patients.

The most popular reason people choose chatbots is to check their symptoms. One study found that 89% of Americans google their symptoms before scheduling an appointment with their doctor. This kind of self-research often turns up alarming results and ends up causing more anxiety. Trusted resources created by medical institutions are often full of medical terminology, which is confusing for the average person.

This is where AI-driven chatbots come in: they let users assess their symptoms through a text-based conversation with a digital health companion. They address the problem by pairing symptom checking with a database of accurate, patient-friendly information. Some chatbots can even direct patients to helpful resources that are not publicly available online.

The introduction of chatbots raised far fewer concerns than ChatGPT does today. This is because chatbots typically have prepared scenarios and responses programmed into them, which makes their behavior predictable: they follow a specific set of rules and respond to certain prompts in a predetermined way.

However, the world does not stand still. Today, traditional chatbots have faded into the background, and a new main actor has stolen the scene: ChatGPT. Let’s look at how GPT-powered chatbots are changing healthcare.
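To make the contrast concrete, here is a minimal sketch of such a rule-based chatbot in Python. The intents, keywords, and replies are hypothetical examples; the point is that every input maps to one prewritten answer, so the bot's behavior can be reviewed in full before release.

```python
"""Minimal rule-based chatbot sketch: fixed intents, fixed replies."""

import re

# Hypothetical intents for a clinic assistant. Each intent maps a set of
# trigger keywords to one prewritten reply, so behavior is fully predictable.
RULES: list[tuple[str, set[str], str]] = [
    ("book_appointment", {"appointment", "book", "schedule"},
     "You can book a visit at the front desk or through the patient portal."),
    ("opening_hours", {"hours", "open", "close"},
     "The clinic is open Monday to Friday, 8:00 to 18:00."),
    ("prescription_refill", {"refill", "prescription"},
     "Refill requests are reviewed by a nurse within one business day."),
]

FALLBACK = "Sorry, I can only help with appointments, opening hours, and refills."


def reply(message: str) -> str:
    """Return the reply of the first rule whose keywords appear in the message."""
    # Lowercase and split into alphabetic words before matching keywords.
    words = set(re.findall(r"[a-z]+", message.lower()))
    for _intent, keywords, answer in RULES:
        if words & keywords:
            return answer
    return FALLBACK
```

For example, `reply("Can I schedule an appointment?")` returns the booking answer, while any question outside the three intents gets the fallback. This predictability is what a GPT-based chatbot trades away in exchange for flexibility.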

ChatGPT and Its Role in Healthtech

The first version of GPT was introduced in 2018. Several versions have been released since then, including GPT-4 in 2023, and the technology continues to generate buzz in the AI community thanks to its strong performance across a wide range of tasks.

ChatGPT has greatly improved on what earlier chatbots could do. Chatbots powered by GPT models can generate more human-like responses and better understand natural language and the nuances of human communication, which makes them more effective at providing personalized support, engaging users, and resolving issues.

According to News Medical, one of the world’s leading open-access medical hubs, ChatGPT has many potential applications in healthcare, including facilitating the shift towards telemedicine, assisting with clinical decision support, streamlining medical record keeping, and providing real-time translation. It could also help identify patients for clinical trials, develop more accurate symptom checkers, enhance medical education, and support mental health.

Users like how ChatGPT presents information in clear sentences and explains concepts in ways that patients and doctors can understand, instead of returning a list of blue links. Many experts believe these new chatbots could overshadow human conversational design and reinvent, or even replace, search engines like Google.

This means that conversational designers will need to focus on creating more human-like conversational experiences, incorporating empathy and emotional intelligence to enhance user engagement and satisfaction.

Alongside ChatGPT’s admirers, there are those who consider the technology a significant threat. We set out to assess how real this threat is and when it is, and is not, worth using a GPT-powered chatbot for medical purposes.

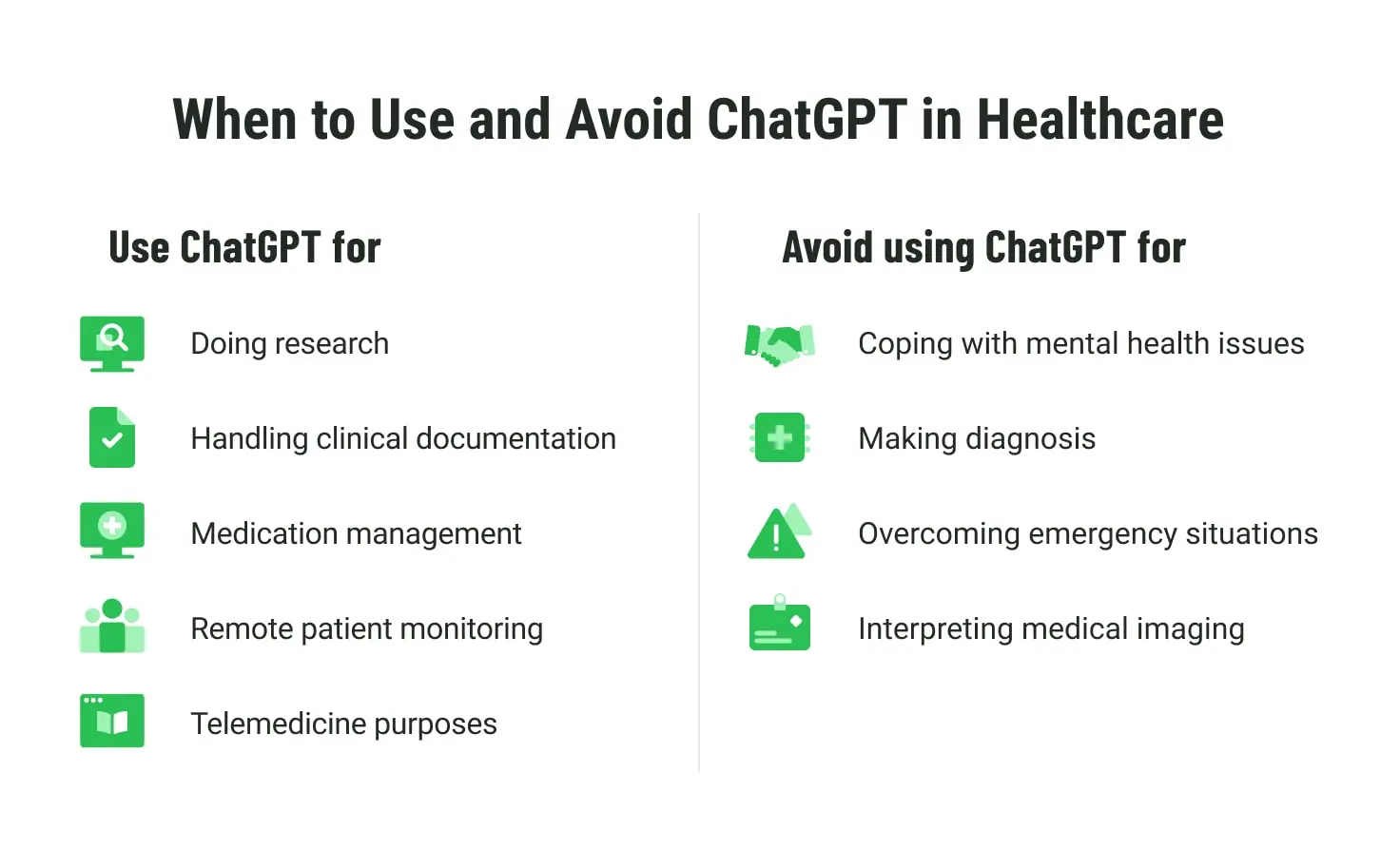

When to Use ChatGPT in Healthcare

Research

Compiling massive amounts of data and making it accessible is one of ChatGPT’s key strengths. Medical workers and students can ask it to compile cancer research from the last several years, look for trends, and summarize the findings. GPT can help analyze data from a study and produce a rapid analysis or a first pass at a systematic review. Summaries like these can help doctors make better-informed decisions in hospitals and clinics.

However, some ChatGPT users notice inaccuracies in its output and encounter hallucinations: unreliable or misleading responses caused by the model’s inherent biases, lack of real-world understanding, or training data limitations. Such outputs can be factually incorrect or unrelated to the given context, so expert review of the information is advisable, especially when it will inform clinical decisions.

Clinical documentation

The underlying model can be fine-tuned on a data set of medical records to help doctors and nurses create accurate clinical notes. For example, a doctor can dictate a patient’s data, such as symptoms, medical history, and examination results, and then ask ChatGPT to turn the transcribed dictation into a structured note. The technology can also analyze accumulated notes and generate summaries, saving time and making documentation more efficient.
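One way to keep such notes consistent is to ask the model for a fixed structure and validate its reply before saving it. The sketch below assumes a hypothetical four-section JSON format and leaves out the actual model call; `build_note_prompt` and `parse_note` are illustrative names, not part of any ChatGPT API.

```python
"""Sketch: ask an LLM for a structured clinical note and validate its reply."""

import json

# Hypothetical note schema; a real app would follow its EHR's format.
REQUIRED_SECTIONS = ("symptoms", "history", "examination", "assessment")


def build_note_prompt(transcript: str) -> str:
    """Build an instruction asking the model to return the note as JSON."""
    keys = ", ".join(REQUIRED_SECTIONS)
    return (
        "Convert the following dictated clinical note into JSON with exactly "
        f"these keys: {keys}. Use only information stated in the dictation; "
        "write null for any section that is not mentioned.\n\n"
        f"Dictation:\n{transcript}"
    )


def parse_note(model_reply: str) -> dict:
    """Parse the model's JSON reply and check that every section is present.

    Raises ValueError so a malformed reply is sent back for human review
    instead of being saved silently.
    """
    try:
        note = json.loads(model_reply)
    except json.JSONDecodeError as err:
        raise ValueError(f"reply is not valid JSON: {err}") from err
    if not isinstance(note, dict):
        raise ValueError("reply must be a JSON object")
    missing = [key for key in REQUIRED_SECTIONS if key not in note]
    if missing:
        raise ValueError(f"missing sections: {missing}")
    return note
```

Rejecting malformed replies rather than patching them keeps a clinician in the loop, which matters given the hallucination risk described above.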

Medication management

Managing multiple medications and following dosage instructions can be challenging for patients. ChatGPT can help by reminding patients of dosage instructions and warning them about potential side effects. It can also inform patients about drug interactions, contraindications, and other factors that affect how medications should be taken.
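Because drug interactions are safety-critical, one possible design (our assumption, not necessarily how existing tools work) is to look warnings up in a clinician-vetted table and let the chatbot only phrase the result for the patient. A minimal sketch with placeholder drug names and example warning text:

```python
"""Sketch: look up drug interactions in a clinician-vetted table."""

from itertools import combinations

# Hypothetical, clinician-maintained interaction table. Drug names and
# warnings are placeholders; frozenset keys make lookup order-independent.
INTERACTIONS: dict[frozenset[str], str] = {
    frozenset({"drug_a", "drug_b"}): "Example warning: avoid combining without medical advice.",
    frozenset({"drug_a", "drug_c"}): "Example warning: take at least 2 hours apart.",
}


def check_interactions(medications: list[str]) -> list[str]:
    """Return a warning for every known interacting pair in the list."""
    # Normalize names and deduplicate before checking every pair once.
    names = sorted({m.strip().lower() for m in medications})
    warnings = []
    for first, second in combinations(names, 2):
        note = INTERACTIONS.get(frozenset({first, second}))
        if note:
            warnings.append(f"{first} + {second}: {note}")
    return warnings
```

Using `frozenset` keys means a pair is found regardless of the order in which the patient lists their medications.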

Telemedicine

ChatGPT is transforming the telemedicine landscape by enabling healthcare providers to have more natural and efficient conversations with patients, offering features such as automated appointment reminders and access to medical research.
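Automated reminders are a simple example of the scheduling logic that sits around the conversational layer. This sketch assumes a hypothetical policy of one reminder a day before and one an hour before the appointment; the chatbot would then send the actual reminder messages.

```python
"""Sketch: compute reminder times for a telemedicine appointment."""

from datetime import datetime, timedelta

# Hypothetical reminder policy: one day before and one hour before.
REMINDER_OFFSETS = (timedelta(days=1), timedelta(hours=1))


def reminder_times(appointment: datetime, now: datetime) -> list[datetime]:
    """Return the reminder times that are still in the future, earliest first."""
    times = [appointment - offset for offset in REMINDER_OFFSETS]
    return sorted(t for t in times if t > now)
```

Reminders whose time has already passed are dropped, so booking a same-day visit does not trigger a stale "tomorrow" reminder.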

ChatGPT can answer patient queries in natural language, monitor patient data, and provide personalized feedback to medical staff. It has significant potential to reduce wait times and healthcare costs, and it is already being used in clinical settings.

Remote patient monitoring

When connected to monitoring devices, ChatGPT-based tools can analyze incoming data and provide real-time insights into a patient's health status. They can alert healthcare providers to concerning trends, allowing early intervention to prevent complications and hospitalizations.
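The alerting step itself can be a simple, auditable rule that runs before any language model is involved, with ChatGPT used to explain the alert to clinicians. Below is a minimal rolling-average sketch; the three-reading window and the 100 bpm limit are hypothetical values for illustration, not clinical guidance.

```python
"""Sketch: flag a concerning trend in remote-monitoring readings."""

from statistics import mean

# Hypothetical thresholds for illustration only, not clinical guidance.
WINDOW = 3           # number of most recent readings to average
LIMIT_BPM = 100.0    # alert if the recent average heart rate exceeds this


def should_alert(heart_rates: list[float], window: int = WINDOW,
                 limit: float = LIMIT_BPM) -> bool:
    """Return True when the average of the last `window` readings exceeds `limit`.

    Averaging over a window reduces false alarms from a single noisy reading.
    """
    if len(heart_rates) < window:
        return False  # not enough data to judge a trend yet
    return mean(heart_rates[-window:]) > limit
```

With these settings, `[80, 98, 104, 110]` triggers an alert (recent average 104 bpm), while an isolated early spike such as `[120, 80, 82, 85]` does not.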

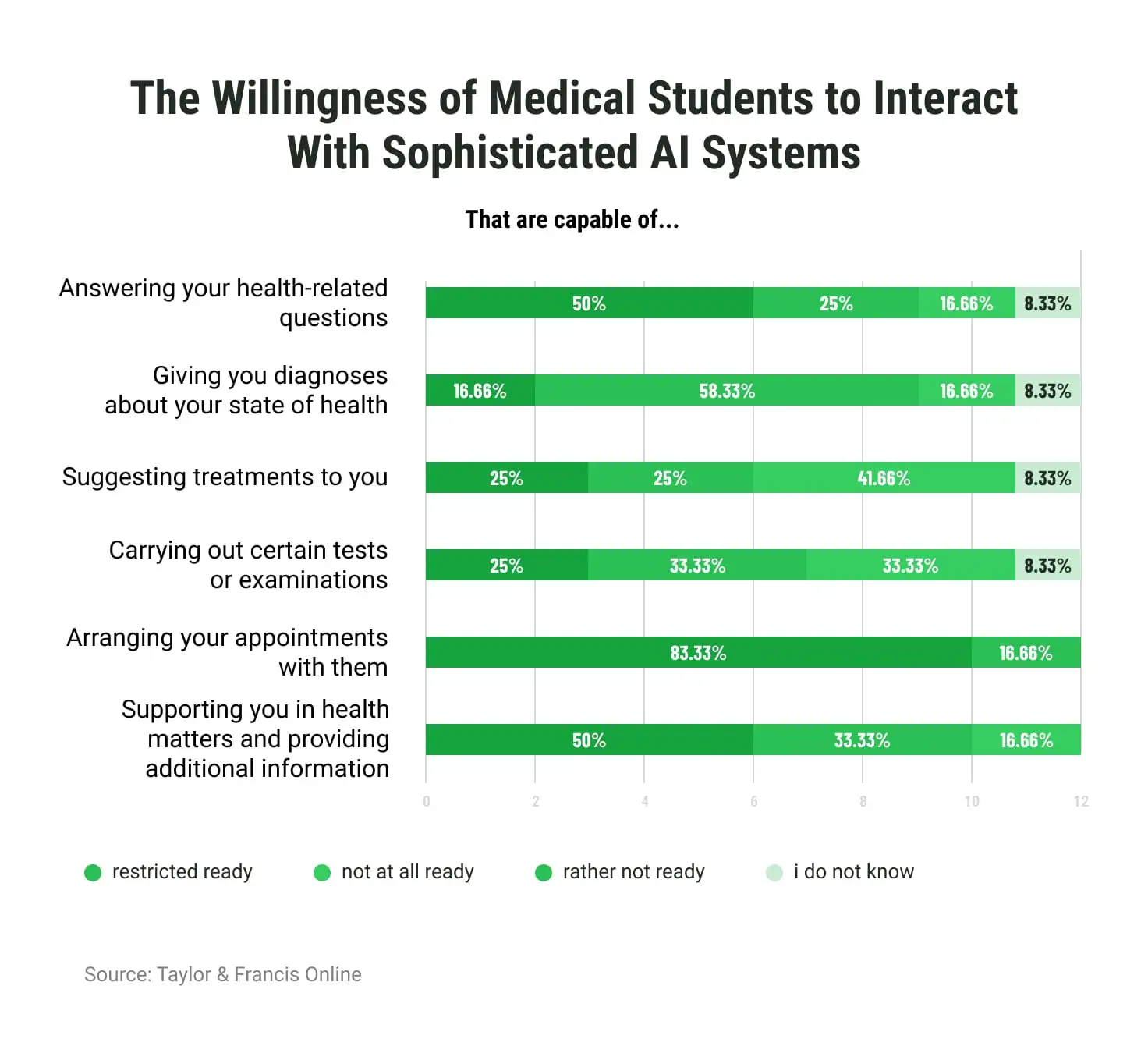

A recent survey of medical students in Germany found that future physicians are optimistic about bringing AI-based technology into their workflows. The chart above shows which tasks the students would not mind delegating to or sharing with AI-driven technologies.

When to Avoid ChatGPT in Health Tech

At the same time, there are several challenges and potential pitfalls of using ChatGPT that raise important questions related to medical ethics, data privacy and security, and the risk of incorrect or misleading information that could harm patients.

Mental health issues

Although ChatGPT can provide helpful information and support, it is not a substitute for professional medical or mental health care. Much of the complexity of psychologists’ and psychotherapists’ work lies in delivering fully integrated care: providing not only treatment but also compassion, a clinical skill that algorithms still cannot replicate.

Moreover, a mental health treatment plan should take into account not just a patient's symptoms but also their personal history and circumstances. For example, suppose a patient is experiencing anxiety, and an assessment shows that its root cause is a traumatic event. In this case, the doctor may suggest therapy to address the underlying trauma instead of relying solely on medication.

Patients themselves cannot always identify the true causes of their condition, so a chatbot that works only from what they report may miss them. AI therefore still has a lot to learn before it can become a reliable virtual assistant for people seeking mental health support. Keep this in mind when developing a mental health app.

Diagnosis

Although GPT has shown promise in making diagnoses, it is still an AI language model that can make mistakes and provide inaccurate or incomplete information. In addition, ChatGPT may not be able to handle complex medical situations that require a deep understanding of the patient's medical history and the nuances of their condition.

In these situations, a human healthcare professional would be better equipped to provide the necessary guidance and support.

Emergency situations

When it comes to life or death, trusting your life to an AI-driven chatbot is an extremely poor decision. ChatGPT can only provide general information and guidance; it is not intended to give medical advice or a diagnosis.

In an emergency health situation, people should immediately seek professional medical assistance from a qualified healthcare provider or call emergency services in their area.
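In an app, this advice can be enforced before a message ever reaches the model: a simple check routes likely emergencies to a fixed reply that points to emergency services. The red-flag phrases below are hypothetical and far from complete; keyword matching will miss many phrasings, so this is a safety net, not a triage system.

```python
"""Sketch: route emergency messages away from the chatbot."""

from typing import Optional

# Hypothetical red-flag phrases; a real list would be defined with clinicians.
RED_FLAGS = (
    "chest pain",
    "can't breathe",
    "cannot breathe",
    "unconscious",
    "severe bleeding",
    "suicide",
)

EMERGENCY_REPLY = (
    "This may be an emergency. Please call your local emergency number "
    "or go to the nearest emergency department now."
)


def triage(message: str) -> Optional[str]:
    """Return an emergency reply if a red-flag phrase appears, else None.

    None means the message may be passed on to the regular chatbot.
    """
    text = message.lower()
    if any(flag in text for flag in RED_FLAGS):
        return EMERGENCY_REPLY
    return None
```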

Interpretation of medical imaging

Interpreting medical imaging is another area where physicians’ expertise is indispensable. While artificial intelligence can aid in analyzing medical images, the precise interpretation of results still relies heavily on human experts.

For example, a radiologist can distinguish a benign tumor from a malignant one based on subtle changes in the texture and shape of the tissue, which may not be clearly apparent to AI-based technologies.

Takeaway

AI-based chatbots like ChatGPT can be used to collect patient symptoms and give doctors a preliminary assessment, potentially reducing wait times for specialist visits. However, ChatGPT should be seen as an extension of a doctor's clinical judgment, not a substitute for it. To sum up, AI has the potential to revolutionize medicine, but it cannot replace doctors’ experience and needs human supervision.

If you’re thinking about developing AI-based chatbots for your healthcare company or are interested in other software solutions, turn to Emerline. We have deep expertise in product development using cutting-edge technologies, including AI, ML, VR/AR, and blockchain. We offer different cooperation models to meet your business needs and give our all to create a quality solution tailored to your requirements. Book a free consultation with our experts right now!

Updated on Apr 21, 2025